Insights from a multilevel meta-analysis on the effectiveness of dialogue-based CALL

Moderator analysis

Abstract of the research methods and results

Dialogue-based computer-assisted language learning (CALL) systems allow learners to practice a foreign language (L2) meaningfully with an automated agent, through either an oral interface (spoken dialogue systems) or a written one (chatbots) (Bibauw, François, & Desmet, 2015). Although various dialogue-based CALL systems have been developed and tested with learners under different evaluation schemes, individual evaluations have provided limited information on their effectiveness for L2 development (Bibauw, François, & Desmet, 2019).

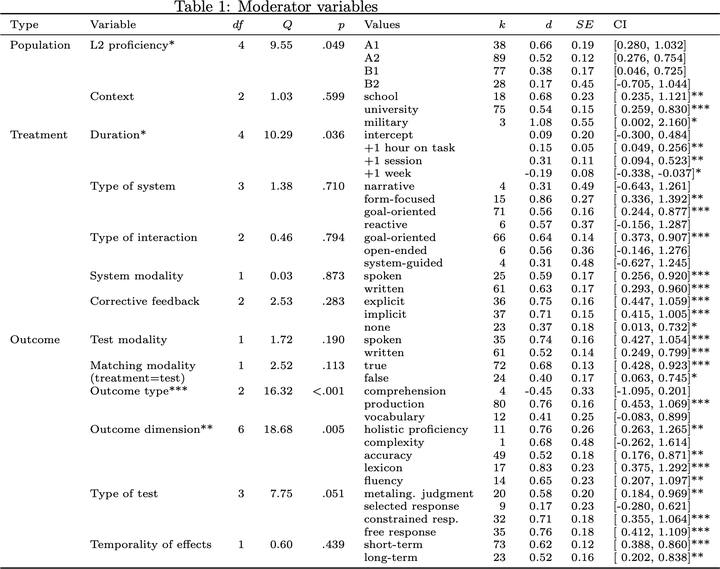

To better understand their effects on L2 proficiency development, we conducted a meta-analysis of all the experimental studies measuring the impact of such systems on language learning outcomes (40 publications). Effect sizes for each variable and group under observation were systematically computed from the available data ($k = 96$). Most individual studies do not reach statistical significance, often because of small sample sizes and insufficiently sensitive outcome measures. By combining all studies in a multilevel linear model, however, we observed a significant medium effect of dialogue-based CALL on general L2 proficiency development ($d = 0.602$).
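As an illustration of the effect size step, the sketch below computes a standardized mean difference with a small-sample correction (Hedges' $g$) from group means, standard deviations and sample sizes, and then pools several effects with inverse-variance weights. It is a minimal sketch under stated assumptions: the function names and the numbers in the usage lines are hypothetical, the between-group design is assumed, and the fixed-effect pooling is a deliberately simplified stand-in for the multilevel model used in the meta-analysis, not a reproduction of it.

```python
import math


def hedges_g(m_t, sd_t, n_t, m_c, sd_c, n_c):
    """Return (g, var_g) for a treatment vs. control comparison.

    Assumes two independent groups; a pre/post design would need
    a different variance formula.
    """
    df = n_t + n_c - 2
    # Pooled standard deviation of the two groups
    sd_pooled = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / df)
    d = (m_t - m_c) / sd_pooled
    # Small-sample correction factor (approximation of Hedges' J)
    j = 1 - 3 / (4 * df - 1)
    # Large-sample approximation of the sampling variance of d
    var_d = (n_t + n_c) / (n_t * n_c) + d**2 / (2 * (n_t + n_c))
    return j * d, j**2 * var_d


def pool_fixed(effects):
    """Inverse-variance weighted mean of (effect, variance) pairs.

    Simplification: ignores between-study heterogeneity and the
    nesting of several effect sizes within one study.
    """
    weights = [1 / var for _, var in effects]
    pooled = sum(w * g for w, (g, _) in zip(weights, effects)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return pooled, se


# Hypothetical post-test scores for two small studies
study_a = hedges_g(10.0, 2.0, 20, 9.0, 2.0, 20)
study_b = hedges_g(55.0, 10.0, 15, 50.0, 12.0, 15)
pooled, se = pool_fixed([study_a, study_b])
print(f"pooled g = {pooled:.3f}, 95% CI half-width = {1.96 * se:.3f}")
```

Pooling in this way shows why individually non-significant studies can yield a significant combined estimate: the standard error of the pooled effect shrinks as the weights accumulate.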

By integrating moderator variables into our statistical model, we can assess the relative effectiveness of certain design characteristics (spoken vs. written interface, task-oriented vs. open-ended interaction, form-focused vs. meaning-focused design) on different learning outcomes (holistic writing and speaking proficiency, as well as specific measures of complexity, accuracy and fluency, among others). We also model the effect of treatment duration and spacing on these outcomes, to better inform future system and research design. Compared with Bibauw et al. (2017), we updated our pool of studies, clarified our exclusion process, refined the effect size calculation methodology and the multilevel modelling, and computed a new mixed-effects model that takes treatment duration and spacing into account.

On the topic of “data in CALL research”

Among the methodological steps required to conduct a meta-analysis, the openness of data (information) has a particularly striking impact: a meta-analysis is only possible if enough data are actually available, and the quality, clarity and standardization of those data directly determine the quality of the insights it can provide. Beyond the obvious research data needed to compute effect sizes (experimental results, such as measures of central tendency and variance), and the already well-documented need for their standardized reporting (Larson-Hall & Plonsky, 2015), there are also issues in the reporting of learner and pedagogical (meta)data. This is particularly true of the nature and characteristics of treatments, whose precise features are rarely described in full, and even less often in comparable terms.

In line with this need for more open data, we will release the complete dataset of studies used in this meta-analysis, as well as the source code of its statistical processing, in an Open Science Framework repository, together with guidelines for collaboratively updating this bibliographical database of dialogue-based CALL.

References

- Bibauw, S., François, T., & Desmet, P. (2015). Dialogue-based CALL: an overview of existing research. In F. Helm, L. Bradley, M. Guarda, & S. Thouësny (Eds.), Critical CALL – Proceedings of the 2015 EUROCALL Conference, Padova, Italy (pp. 57–64). Dublin: Research-publishing.net. doi:10.14705/rpnet.2015.000310

- Bibauw, S., François, T., & Desmet, P. (2017, May). The effectiveness of dialogue-based CALL on L2 proficiency development: a meta-analysis. Presented at the CALICO Conference 2017, Flagstaff, AZ.

- Bibauw, S., François, T., & Desmet, P. (2019). Discussing with a computer to practice a foreign language: from a conceptual framework to a research agenda for dialogue-based CALL.

- Larson-Hall, J., & Plonsky, L. (2015). Reporting and interpreting quantitative research findings: what gets reported and recommendations for the field. Language Learning, 65(S1), 127–159. doi:10.1111/lang.12115